Spark

Installation

    cd /usr/app
    wget https://archive.apache.org/dist/spark/spark-2.0.0/spark-2.0.0-bin-hadoop2.7.tgz
    tar -zxvf spark-2.0.0-bin-hadoop2.7.tgz
    # extracts to /usr/app/spark-2.0.0-bin-hadoop2.7

Add environment variables

    vi /etc/profile
    # add the following lines:
    export Spark_HOME=/usr/app/spark-2.0.0-bin-hadoop2.7
    export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HBASE_HOME/bin:$HIVE_HOME/bin:$Flume_HOME/bin:$Spark_HOME/bin:$Scala_HOME/bin
    # reload the profile so the current shell picks up the changes
    source /etc/profile
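
Because these lines have to be added on every node, and setup steps are often re-run, appending them blindly can leave duplicate `export` lines in /etc/profile. A minimal sketch of an idempotent append, assuming bash and a standard `grep`; `append_once` is a hypothetical helper name, not something Spark provides:

```shell
# Hypothetical helper: append a line to a profile file only if that exact
# line is not already present, so re-running the setup is harmless.
# Usage: append_once FILE LINE
append_once() {
  local file="$1" line="$2"
  # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
  # a missing file makes grep fail, so the line is appended (creating it)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example (as root on each node), then reload the profile:
#   append_once /etc/profile 'export Spark_HOME=/usr/app/spark-2.0.0-bin-hadoop2.7'
#   append_once /etc/profile 'export PATH=$PATH:$Spark_HOME/bin'
#   source /etc/profile
```

The single quotes keep `$PATH` and `$Spark_HOME` unexpanded in the file, so they are resolved each time the profile is loaded rather than frozen at write time.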

Configure ./conf/slaves

    cd /usr/app/spark-2.0.0-bin-hadoop2.7/conf/
    # first, make a copy of slaves.template
    cp slaves.template slaves
    # edit slaves and list the worker hosts, one per line:
    hadoop11
    hadoop12
    hadoop13
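
The slaves file is read by Spark's `sbin` start scripts to decide which hosts get a worker, one hostname per line. A minimal sketch that writes it without an editor; `write_slaves` is a hypothetical helper, and note that it overwrites whatever the copied template contained:

```shell
# Hypothetical helper: write conf/slaves with one worker hostname per line.
# Usage: write_slaves CONF_DIR HOST...
write_slaves() {
  local conf_dir="$1"
  shift
  # printf reuses its format for every remaining argument: one host per line
  printf '%s\n' "$@" > "$conf_dir/slaves"
}

# Example, with the conf directory and hosts used in this guide:
#   write_slaves /usr/app/spark-2.0.0-bin-hadoop2.7/conf hadoop11 hadoop12 hadoop13
```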

Configure ./conf/spark-env.sh
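
The settings in this step can also be appended without an interactive editor. A minimal sketch using a quoted heredoc (`<<'EOF'`), which stops the shell from expanding anything while the file is written; it assumes `spark-env.sh.template` has already been copied to `spark-env.sh`, `write_spark_env` is a hypothetical helper, and the values are taken unchanged from this guide:

```shell
# Hypothetical helper: append this guide's settings to conf/spark-env.sh.
# The quoted 'EOF' delimiter writes the lines verbatim (no expansion).
# Usage: write_spark_env CONF_DIR
write_spark_env() {
  local conf_dir="$1"
  cat >> "$conf_dir/spark-env.sh" <<'EOF'
export JAVA_HOME=/usr/app/jdk1.8.0_77
export Scala_HOME=scala-2.11.11
export SPARK_MASTER_IP=hadoop11
export SPARK_WORKER_MEMORY=2g
export MASTER=spark://hadoop11:7077
EOF
}

# Example:
#   write_spark_env /usr/app/spark-2.0.0-bin-hadoop2.7/conf
```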

    # make a copy of spark-env.sh.template
    cp spark-env.sh.template spark-env.sh
    vi /usr/app/spark-2.0.0-bin-hadoop2.7/conf/spark-env.sh
    # add the following lines:
    export JAVA_HOME=/usr/app/jdk1.8.0_77
    export Scala_HOME=scala-2.11.11
    export SPARK_MASTER_IP=hadoop11
    export SPARK_WORKER_MEMORY=2g
    export MASTER=spark://hadoop11:7077

Copy the spark-2.0.0-bin-hadoop2.7 folder to the other two nodes

    scp -r /usr/app/spark-2.0.0-bin-hadoop2.7 root@hadoop12:/usr/app
    scp -r /usr/app/spark-2.0.0-bin-hadoop2.7 root@hadoop13:/usr/app
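
The two `scp` commands generalize to a loop over the hosts in `conf/slaves`, skipping the master (hadoop11), where the directory already lives. A minimal sketch, assuming root SSH access to each worker as in the commands above; `distribute_spark` and the `DRY_RUN` switch are hypothetical, added only so the loop can be previewed before copying anything:

```shell
# Hypothetical helper: copy the Spark directory to every host listed in
# conf/slaves except the master. With DRY_RUN=1 it only prints the commands.
# Usage: distribute_spark SPARK_DIR MASTER_HOST
distribute_spark() {
  local spark_dir="$1" master="$2" host
  while IFS= read -r host; do
    # skip blank lines, comment lines, and the master itself
    case "$host" in ''|'#'*) continue ;; esac
    [ "$host" = "$master" ] && continue
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $spark_dir root@$host:$(dirname "$spark_dir")"
    else
      # </dev/null keeps scp from consuming the loop's stdin (the host list)
      scp -r "$spark_dir" "root@$host:$(dirname "$spark_dir")" < /dev/null
    fi
  done < "$spark_dir/conf/slaves"
}

# Example (preview first, then run for real):
#   DRY_RUN=1 distribute_spark /usr/app/spark-2.0.0-bin-hadoop2.7 hadoop11
#   distribute_spark /usr/app/spark-2.0.0-bin-hadoop2.7 hadoop11
```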

Edit the environment variables on the other two nodes

    export Spark_HOME=/usr/app/spark-2.0.0-bin-hadoop2.7
    export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HBASE_HOME/bin:$Spark_HOME/bin:$S

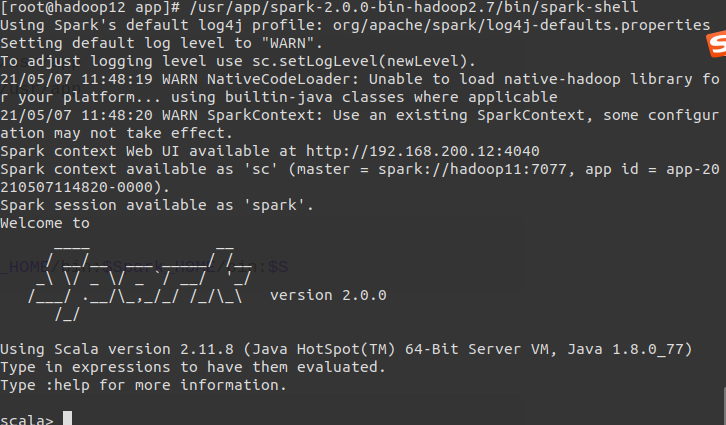

Start

    /usr/app/spark-2.0.0-bin-hadoop2.7/sbin/start-all.sh   # start the Spark cluster
    /usr/app/spark-2.0.0-bin-hadoop2.7/bin/spark-shell     # start spark-shell
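
To confirm the cluster came up, the JDK's `jps` tool lists running Java processes by main class name. With this layout hadoop11 should show both `Master` and `Worker` (it is also listed in `slaves`), while hadoop12 and hadoop13 should show `Worker`. A minimal sketch that checks that listing; `check_daemons` is a hypothetical helper that reads `jps` output on stdin, so it can be tried without a running cluster:

```shell
# Hypothetical helper: read `jps` output on stdin and report whether each
# named daemon (e.g. Master, Worker) is running. Returns 1 if any is missing.
# Usage: jps | check_daemons Master Worker
check_daemons() {
  local listing name missing=0
  listing=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClassName>"; compare the 2nd field exactly
    if printf '%s\n' "$listing" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "$name: running"
    else
      echo "$name: NOT running"
      missing=1
    fi
  done
  return "$missing"
}
```

On hadoop11 run `jps | check_daemons Master Worker`; on the other two nodes run `jps | check_daemons Worker`. A nonzero exit status means at least one expected daemon is missing.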